From Scatological Data To Engaging Audio: An AI's "Poop" Podcast Creation

Data Acquisition and Processing: The "Poop" Data Pipeline

Creating an AI-powered poop podcast requires a robust data pipeline. This involves sourcing, cleaning, and transforming raw data into a format usable by AI models.

Sourcing Scatological Data: The Foundation of Our "Poop Podcast"

Sourcing scatological data raises ethical questions before technical ones. Human samples are health data, so they must be collected with consent, anonymized, and handled in line with the applicable privacy regulations. Possible sources include:

- Anonymized human health data: Data from clinical studies, focusing on microbiome analysis and fecal matter composition, can provide valuable insights, provided all necessary ethical and privacy protocols are followed.

- Animal studies: Research involving animal fecal samples offers another avenue, providing a less sensitive data source for initial experimentation and model development. This must be conducted with the proper ethical review and approvals.

- Publicly available datasets (with appropriate anonymization): While less common for this specific type of data, exploring existing public datasets and adapting them to the AI model's requirements can be a valuable starting point.

Data cleaning is crucial: missing values, outliers, and inconsistencies must be handled before the AI's analysis can be considered accurate and reliable. The data types that need cleaning typically include:

- Microbial composition: Analyzing the types and abundance of bacteria in fecal samples.

- Macromolecule concentrations: Assessing levels of proteins, carbohydrates, and fats.

- Metabolite profiles: Identifying specific byproducts of digestion and metabolism.
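
The cleaning steps above can be sketched in a few lines of standard-library Python. This is only an illustration under stated assumptions: the function names, the taxa, and the choice of Tukey fences for outliers are ours, not a method prescribed by any particular study.

```python
from statistics import quantiles


def clip_outliers(values, k=1.5):
    """Clip values to the Tukey fences [Q1 - k*IQR, Q3 + k*IQR].

    Clipping (rather than dropping) is an assumption made for this sketch.
    """
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]


def to_relative_abundance(counts):
    """Convert raw per-taxon counts to proportions that sum to 1."""
    if any(c < 0 for c in counts.values()):
        raise ValueError("counts must be non-negative")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("sample has no counts")
    return {taxon: c / total for taxon, c in counts.items()}


# Hypothetical metabolite readings with one implausible value.
readings = [10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 500]
cleaned = clip_outliers(readings)

# Hypothetical microbial counts for a single sample.
abundance = to_relative_abundance({"Bacteroides": 30, "Firmicutes": 70})
```

Converting counts to proportions makes samples of different sequencing depth comparable, which is one common way to remove an inconsistency between samples.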

Data Transformation and Feature Engineering: Preparing for AI Analysis

Raw scatological data needs transformation before it can be used by an AI. This process involves:

- Data normalization: Scaling data to a common range, preventing features with larger values from dominating the analysis.

- Feature extraction: Deriving new features from existing ones to better represent the data's underlying patterns. For example, we might create a composite score indicating gut health based on multiple microbial indicators.

- Dimensionality reduction: Reducing the number of features while preserving important information, which improves model efficiency and reduces the risk of overfitting.
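
As a minimal sketch of the first two steps, the following shows min-max normalization and a weighted composite score. The feature names and weights are hypothetical placeholders chosen for illustration and are not clinically validated. Dimensionality reduction is left out because it is usually delegated to a numerical library.

```python
def min_max_scale(values):
    """Scale a list of numbers to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant feature carries no information; map it to 0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def composite_score(features, weights):
    """Weighted average of features that are already scaled to [0, 1]."""
    total_weight = sum(weights.values())
    return sum(features[name] * w for name, w in weights.items()) / total_weight


# Hypothetical diversity values across three samples.
scaled = min_max_scale([2, 4, 6])

# Hypothetical normalized indicators for one sample, with made-up weights.
score = composite_score(
    {"diversity": 1.0, "butyrate_producers": 0.0},
    {"diversity": 3, "butyrate_producers": 1},
)
```

Scaling before combining matters here: without it, whichever indicator has the largest raw values would dominate the composite score.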

Handling noisy or incomplete data is another significant challenge. Techniques like imputation (filling in missing values) and smoothing can help mitigate these issues.
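
Both techniques can be illustrated with a short sketch. It assumes that missing measurements are represented as `None` and that median imputation and a centered moving average are acceptable choices for the data at hand; other strategies may suit a given dataset better.

```python
from statistics import median


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a series with no observed values")
    fill = median(observed)
    return [fill if v is None else v for v in values]


def moving_average(values, window=3):
    """Centered moving average; the window shrinks at the series edges."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        chunk = values[max(0, i - half): i + half + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


filled = impute_median([1.0, None, 3.0])
smoothed = moving_average([1, 2, 3, 4], window=3)
```

Imputation should run before smoothing, since the moving average cannot operate on missing entries.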

AI-Powered Podcast Script Generation: Turning Data into Narrative

Once the data is processed, we use AI to generate the podcast script.

Choosing the Right AI Model: The Engine of Our "Poop Podcast"

Several AI models are suitable for this task:

- Large language models (LLMs): Models like GPT-3 or similar can generate human-quality text based on the provided data features and narrative prompts. They excel at creating engaging and coherent stories.

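- Generative adversarial networks (GANs): GANs have been explored for text generation, but they are harder to train on discrete text than on images and are far less commonly used for scripts than LLMs. Any GAN-generated script would need careful training and additional checks to ensure factual accuracy.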

Choosing the right model depends on several factors, including the complexity of the narrative, the desired level of creativity, and the computational resources available. Model training and fine-tuning are crucial for adapting the chosen AI to the specific characteristics of the scatological data and the desired podcast style.
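
Whichever model is chosen, the processed features have to reach it in textual form. The sketch below builds a narrative prompt from a feature dictionary; the function name, the prompt wording, and the feature names are assumptions made for illustration, and the call to the model itself is deliberately omitted because it depends on the provider.

```python
def build_prompt(features, style="friendly science podcast"):
    """Turn processed data features into a text prompt for a language model."""
    lines = [f"- {name}: {value:.2f}" for name, value in sorted(features.items())]
    return (
        f"Write a short segment for a {style}.\n"
        "Use only the measurements listed below and do not invent numbers.\n"
        "Measurements:\n" + "\n".join(lines)
    )


# Hypothetical features produced by the data pipeline.
prompt = build_prompt({"shannon_diversity": 3.2, "gut_health_score": 0.75})
```

Stating the measurements explicitly and instructing the model not to invent numbers does not guarantee accuracy, but it gives the later fact-checking stage a fixed list to check against.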

Crafting a Narrative from Scatological Data: From "Poop" to Podcast

The AI interprets the data and translates it into a compelling narrative. This might involve:

- Storytelling techniques: Structuring the podcast around a central theme, using characters (perhaps personified gut bacteria!), and creating plot arcs.

- Data sonification and analogy: Since a podcast has no visuals, data insights have to be conveyed in sound, for example through sound effects whose pitch or intensity tracks a measurement, or through everyday analogies.

- Fact-checking and scientific accuracy: Ensuring all information presented is accurate and based on reliable scientific evidence.

This stage requires careful consideration of how to present complex scientific information in a clear, engaging, and accessible manner suitable for a broad audience. Maintaining listener engagement is key to the success of any "poop podcast."
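
Part of the fact-checking step can be automated. As a rough sketch, the function below flags every number in a generated script that does not match any value in the source data. The function name and tolerance are assumptions, and the check is intentionally crude: it only sees numerals, so figures written out in words, and legitimate numbers such as episode numbers, still need a human reviewer.

```python
import re


def unsupported_numbers(script, features, tolerance=0.01):
    """Return numeric tokens in the script that match no known feature value."""
    known = list(features.values())
    flagged = []
    for token in re.findall(r"\d+(?:\.\d+)?", script):
        value = float(token)
        if not any(abs(value - k) <= tolerance for k in known):
            flagged.append(token)
    return flagged


# Hypothetical script line and source features.
script = "Diversity was 3.2, while butyrate reached 45 units."
flagged = unsupported_numbers(script, {"diversity": 3.2, "butyrate": 12.0})
```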

Audio Production and Post-Processing: Refining the "Poop Podcast"

The final stage focuses on transforming the generated script into a high-quality audio product.

Text-to-Speech Conversion: Giving Voice to Our Data

Text-to-speech (TTS) technology converts the script into audio. Several options exist, each with pros and cons:

- Cloud-based TTS engines: Services like Amazon Polly or Google Cloud Text-to-Speech offer various voices and are scalable.

- Desktop TTS software: Locally installed software offers more control over voices and processing but may require more technical expertise to set up and tune.

Choosing the right engine depends on budget, voice quality requirements, and the desired level of customization. Enhancing audio quality through noise reduction and audio mastering is also crucial for a professional-sounding podcast.
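
Many TTS engines, including the two cloud services named above, accept SSML (Speech Synthesis Markup Language) as input, which allows pauses and paragraph structure to be specified in the script. The following sketch wraps script paragraphs in basic SSML. The function name and the default pause length are assumptions, and tag support varies by engine and voice, so the output should be checked against the chosen service's documentation.

```python
from xml.sax.saxutils import escape


def to_ssml(paragraphs, pause_ms=500):
    """Wrap script paragraphs in SSML with a pause between paragraphs."""
    # escape() protects characters such as & and < that would break the markup.
    parts = [f"<p>{escape(p)}</p>" for p in paragraphs]
    pause = f'<break time="{pause_ms}ms"/>'
    return "<speak>" + pause.join(parts) + "</speak>"


ssml = to_ssml(["Bacteria & friends", "Welcome back."])
```

The resulting string would then be passed to the TTS engine in place of plain text.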

Adding Sound Effects and Music: Enhancing the "Poop Podcast" Experience

Sound design plays a crucial role in listener engagement. Two elements matter most:

- Sound effects: Enhance the narrative by adding emphasis, creating atmosphere, or illustrating specific points.

- Royalty-free music: Provides background music that complements the tone and mood of the podcast without infringing copyright, provided the license terms are followed.

Careful selection and integration of audio elements create a more immersive and memorable listening experience.
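
One common integration technique is ducking, where the music is lowered whenever the narrator speaks. The sketch below mixes two tracks represented as lists of float samples in the range [-1, 1]. The gain values and threshold are illustrative assumptions, and the per-sample decision is a simplification: production tools smooth the gain over time to avoid audible pumping.

```python
def mix_with_ducking(speech, music, music_gain=0.3, duck_gain=0.1, threshold=0.05):
    """Mix speech and music, lowering the music while speech is present."""
    mixed = []
    for i, s in enumerate(speech):
        # Loop the music if it is shorter than the speech track.
        m = music[i % len(music)] if music else 0.0
        gain = duck_gain if abs(s) > threshold else music_gain
        sample = s + gain * m
        # Hard-limit to the valid sample range to avoid clipping artifacts on export.
        mixed.append(max(-1.0, min(1.0, sample)))
    return mixed


# Silence followed by speech, over constant full-scale music.
mixed = mix_with_ducking([0.0, 0.5], [1.0, 1.0])
```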

Conclusion: The Future of "Poop Podcasts" and Data-Driven Audio

Creating an AI-powered "poop podcast" involves a multi-step process, from ethical data acquisition and sophisticated AI model selection to careful audio production. This unconventional approach demonstrates the power of AI to transform seemingly mundane data into engaging and informative audio content. The unique insights gleaned from scatological data offer a new perspective on familiar topics, highlighting the potential of this technology to uncover unexpected stories within diverse datasets.

We encourage you to explore the exciting possibilities of AI-powered podcast creation. Experiment with different data sources and AI models; perhaps your next project will be a groundbreaking "poop podcast" – or a data-driven podcast on an entirely different, equally intriguing subject!

Featured Posts

-

Trump Administrations Influence On European Ai Regulations

Apr 26, 2025

Trump Administrations Influence On European Ai Regulations

Apr 26, 2025 -

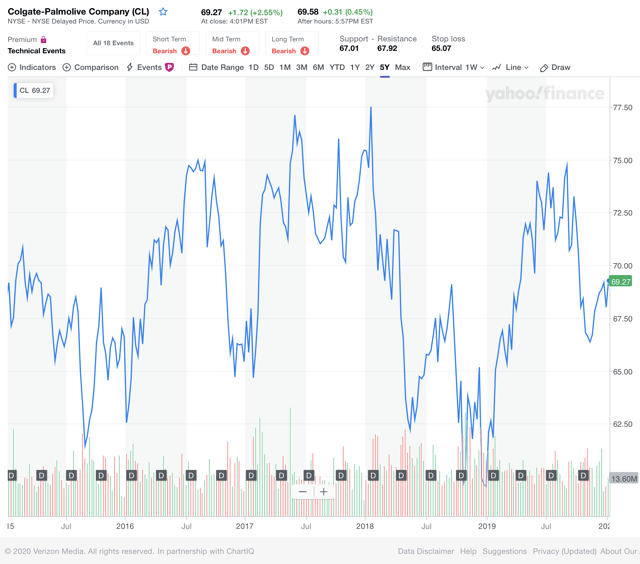

Colgates Cl Financial Performance Suffers From 200 Million Tariff Burden

Apr 26, 2025

Colgates Cl Financial Performance Suffers From 200 Million Tariff Burden

Apr 26, 2025 -

Blue Origins Rocket Launch Halted By Vehicle Subsystem Problem

Apr 26, 2025

Blue Origins Rocket Launch Halted By Vehicle Subsystem Problem

Apr 26, 2025 -

American Battleground Taking On A Billionaire The Inside Story

Apr 26, 2025

American Battleground Taking On A Billionaire The Inside Story

Apr 26, 2025 -

Open Ais Chat Gpt Under Ftc Scrutiny Privacy And Data Concerns

Apr 26, 2025

Open Ais Chat Gpt Under Ftc Scrutiny Privacy And Data Concerns

Apr 26, 2025